AI (TroveLLM)

Clinical intelligence you can trust—at enterprise scale

What it is

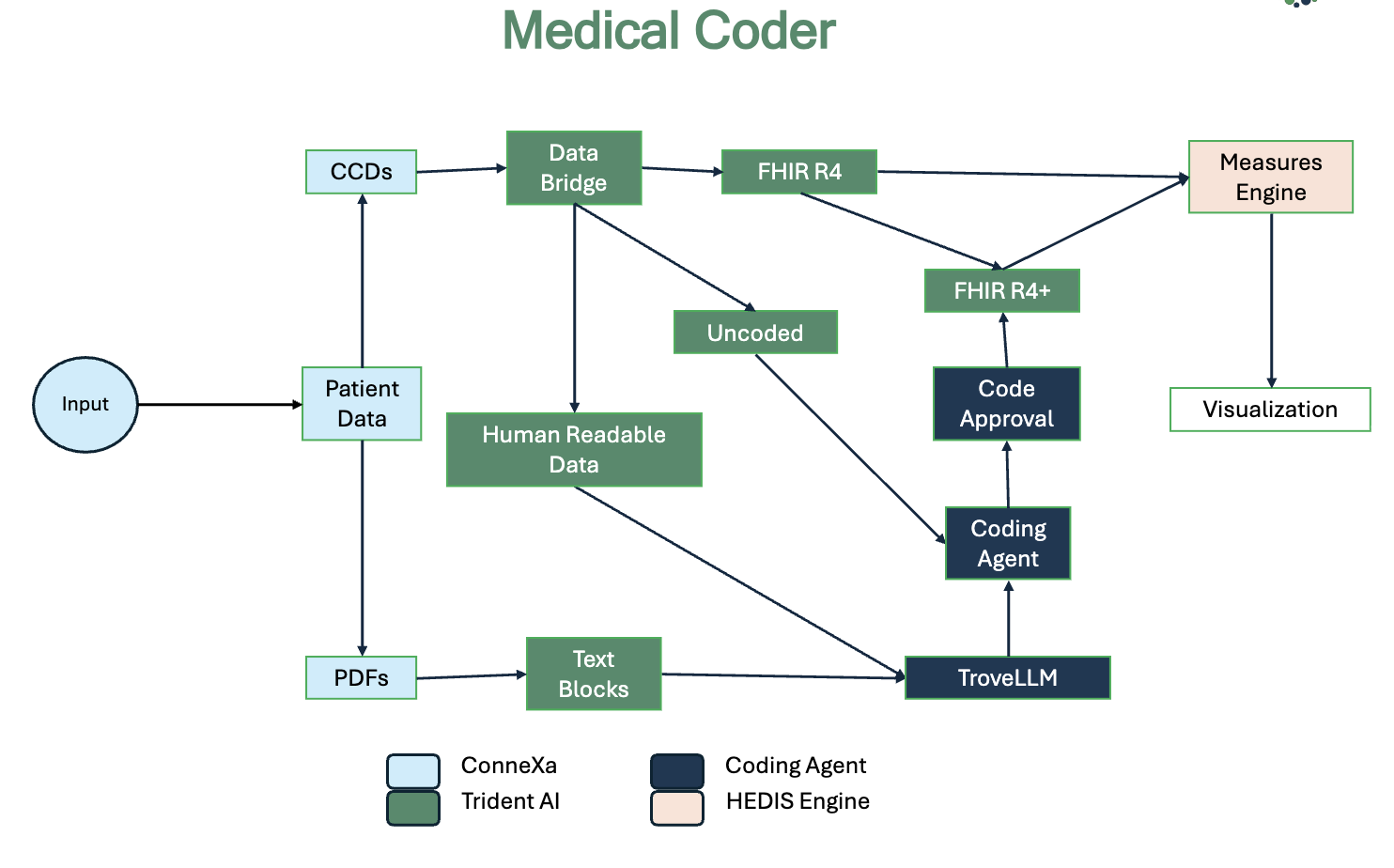

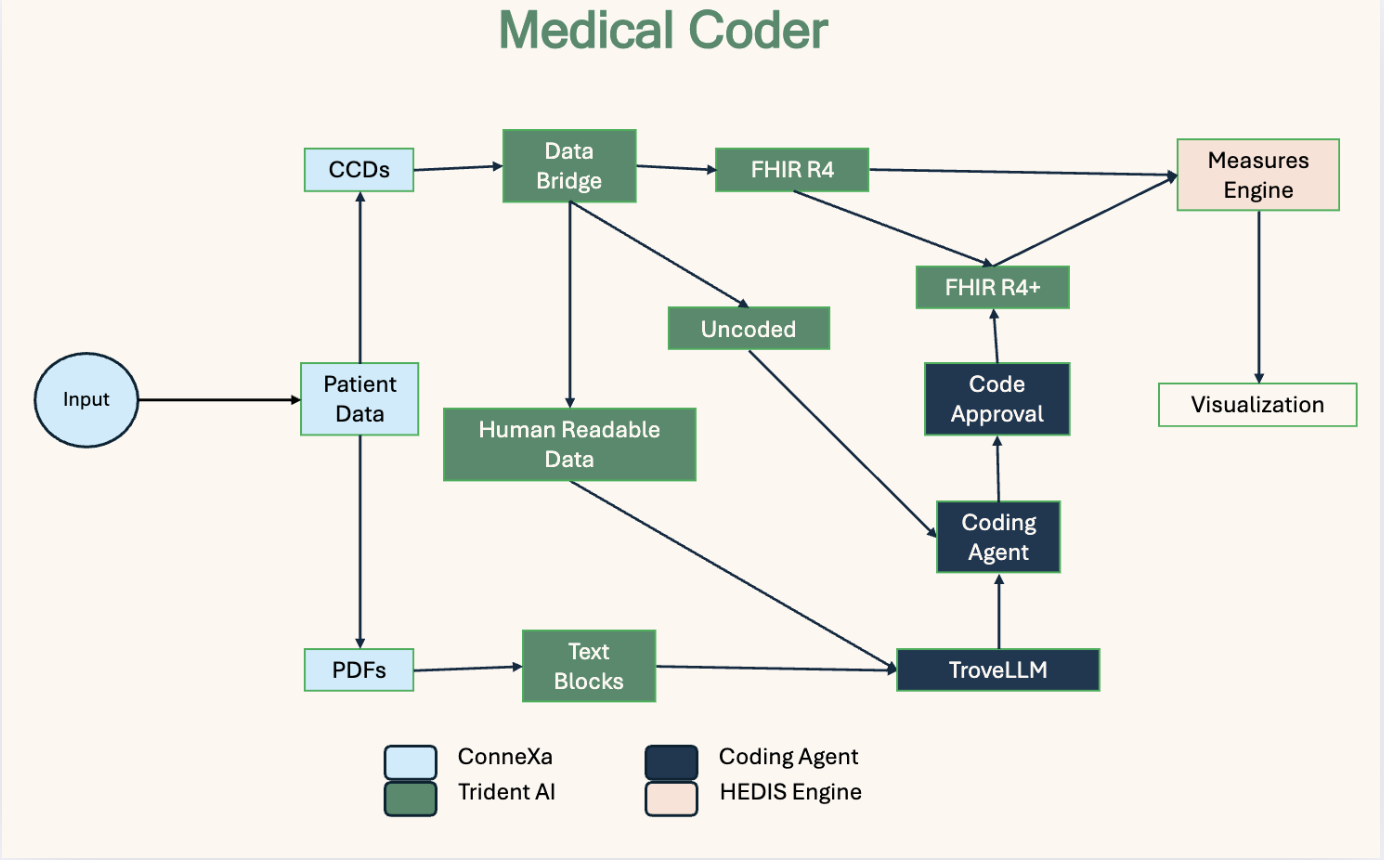

TroveLLM is Trove’s healthcare-native AI and reasoning layer, purpose-built for understanding clinical data—not generic text.

Unlike general-purpose language models, TroveLLM is designed to operate within the constraints and expectations of regulated healthcare environments. It applies clinical context, domain knowledge, and validation to every transformation, so that AI outputs are not only intelligent but also reliable, explainable, and safe for production use. At every stage of the data pipeline, TroveLLM adds context, reasoning, and transparency.

What TroveLLM does

TroveLLM applies clinical reasoning to unstructured and semi-structured healthcare data to produce structured, standards-ready intelligence.

Core capabilities include:

Extraction of clinical entities from unstructured text and documents

Conversion of PDFs, notes, and narratives into clinical codes

Contextual reasoning based on clinical relationships—not keyword matching

Generation of explainable, auditable outputs suitable for enterprise workflows

This enables organizations to operationalize AI without sacrificing trust or governance.

Supported coding and enrichment

TroveLLM supports industry-standard clinical coding and normalization, including:

ICD-10-CM for diagnoses and ICD-10-PCS for inpatient procedures

SNOMED CT for clinical concepts

LOINC for labs and observations

Clinical concept normalization across sources and formats

Outputs are designed to integrate seamlessly with FHIR, OMOP, and analytics pipelines.
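As an illustration of what standards-ready output can look like, the sketch below packages one extracted diagnosis as a FHIR CodeableConcept that carries both an ICD-10-CM and a SNOMED CT coding. The `ExtractedCode` type and `to_codeable_concept` function are hypothetical and are not part of any TroveLLM interface; only the terminology system URIs are the standard FHIR identifiers.

```python
from dataclasses import dataclass

# Standard FHIR system URIs for the terminologies listed above.
SYSTEM_URIS = {
    "ICD-10-CM": "http://hl7.org/fhir/sid/icd-10-cm",
    "SNOMED CT": "http://snomed.info/sct",
    "LOINC": "http://loinc.org",
}


@dataclass
class ExtractedCode:
    """Hypothetical record for one code produced by an extraction step."""
    terminology: str  # must be a key of SYSTEM_URIS
    code: str
    display: str


def to_codeable_concept(text: str, codes: list[ExtractedCode]) -> dict:
    """Build a FHIR CodeableConcept (as a plain dict) from extracted codes."""
    codings = []
    for c in codes:
        if c.terminology not in SYSTEM_URIS:
            raise ValueError(f"unsupported terminology: {c.terminology}")
        codings.append({
            "system": SYSTEM_URIS[c.terminology],
            "code": c.code,
            "display": c.display,
        })
    # 'text' keeps the original phrase from the source document for reviewers.
    return {"coding": codings, "text": text}


concept = to_codeable_concept(
    "type 2 diabetes, no complications",
    [
        ExtractedCode("ICD-10-CM", "E11.9", "Type 2 diabetes mellitus without complications"),
        ExtractedCode("SNOMED CT", "44054006", "Type 2 diabetes mellitus"),
    ],
)
print(concept["coding"][0]["system"])  # http://hl7.org/fhir/sid/icd-10-cm
```

Because the result is plain JSON-compatible data, the same structure could be placed in a FHIR Condition's `code` element or mapped onward to an analytics table.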

Built for trust and governance

Trust is not optional in healthcare AI. TroveLLM is engineered with governance and oversight at its core.

Key features include:

Confidence scoring for extracted entities and codes

Traceable, reproducible transformations

Human-reviewable outputs for validation and quality control

Design principles aligned with regulated healthcare environments
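One common way to combine confidence scoring with human review is to route each extracted code by a threshold: high-confidence results pass through, and the rest go to a reviewer queue. The sketch below assumes a per-code score in [0, 1] and an arbitrary threshold of 0.9; the `Extraction` type, the `route_for_review` function, and the threshold value are illustrative assumptions, not TroveLLM's actual interface or defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Extraction:
    """Hypothetical extracted code with a model confidence score in [0, 1]."""
    code: str
    confidence: float
    source_span: str  # text the code was derived from, kept for traceability


def route_for_review(items, threshold=0.9):
    """Split extractions into auto-accepted and human-review lists.

    The default threshold is an illustrative assumption; in practice it would
    be tuned per code set and validated against reviewer outcomes.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    accepted, needs_review = [], []
    for item in items:
        # Scores at or above the threshold are accepted; the rest are queued.
        if item.confidence >= threshold:
            accepted.append(item)
        else:
            needs_review.append(item)
    return accepted, needs_review


batch = [
    Extraction("E11.9", 0.97, "type 2 diabetes, no complications"),
    Extraction("I10", 0.62, "BP elevated at visit"),
]
accepted, needs_review = route_for_review(batch)
print([e.code for e in accepted], [e.code for e in needs_review])
# ['E11.9'] ['I10']
```

Keeping `source_span` on every record lets a reviewer trace each decision back to the original document text.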

This allows teams to deploy AI responsibly—without introducing opaque decision-making or compliance risk.

Who it’s for

TroveLLM is designed for teams that require both intelligence and accountability:

Analytics and AI teams building models on clinical data

Risk adjustment and quality programs requiring accurate coding

Clinical automation workflows where reliability is critical

Platforms and enterprises that demand explainability and auditability

Why it matters

In healthcare, AI that cannot be explained cannot be trusted—and AI that cannot be trusted cannot scale.

Uncontrolled or opaque AI introduces:

Compliance risk

Data quality issues

Operational friction

Loss of stakeholder confidence

TroveLLM is built to be accurate first, explainable by design, and intelligent where it counts, making it suitable for enterprise deployment across regulated healthcare workflows.

Part of the Trove Platform

TroveLLM works seamlessly with Trove Connectivity, Parsing, and Standards outputs to deliver end-to-end clinical data intelligence.

This is AI designed for healthcare realities—not demos.

Get in Touch

Interested? Get in touch with us today so we can find a way to work together.
